#include <loader.h>

Classes

| Kind | Name | Description |
|---|---|---|
| struct | Metadata | The metadata consists of the ServableId. |

Public Member Functions

| Return type | Member |
|---|---|
| virtual | ~Loader() = default |
| virtual Status | EstimateResources(ResourceAllocation *estimate) const = 0 |
| virtual Status | Load() |
| virtual Status | LoadWithMetadata(const Metadata &metadata) |
| virtual void | Unload() = 0 |
| virtual AnyPtr | servable() = 0 |

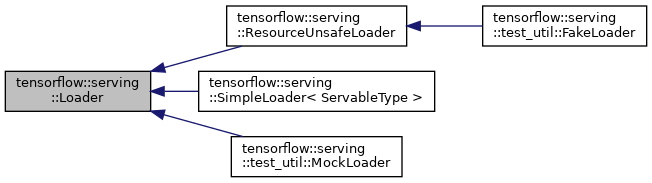

Detailed Description

A standardized abstraction for an object that manages the lifecycle of a servable, including loading and unloading it. Servables are arbitrary objects that serve algorithms or data that often, though not necessarily, use a machine-learned model.

A Loader for a servable object represents one instance of a stream of servable versions, all sharing a common name (e.g. "my_servable") and increasing version numbers, typically representing updated model parameters learned from fresh training data.

A Loader should start in an unloaded state, meaning that no work has been done to prepare to perform operations. A typical instance that has not yet been loaded contains merely a pointer to a location from which its data can be loaded (e.g. a file-system path or network location). Construction and destruction of instances should be fairly cheap. Expensive initialization operations should be done in Load().

A subclass may optionally store a pointer to the Source that originated it, for accessing state shared across multiple servable objects in a given servable stream.

Implementations need to ensure that the methods they expose are thread-safe, or carefully document and/or coordinate their thread-safety properties with their clients to ensure correctness. Servables do not need to worry about concurrent execution of Load()/Unload() as the caller will ensure that does not happen.
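The lifecycle described above (cheap construction, expensive work deferred to Load(), release in Unload()) can be sketched as follows. This is a minimal illustration, not the library's code: `Status` and `Loader` here are trimmed-down hypothetical stand-ins so the example compiles on its own, and `VocabularyLoader` and its `loaded()` helper are invented for the example.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for the serving library's Status type.
class Status {
 public:
  Status() = default;  // Default-constructed means "OK".
  explicit Status(std::string error) : ok_(false), error_(std::move(error)) {}
  bool ok() const { return ok_; }

 private:
  bool ok_ = true;
  std::string error_;
};

// Hypothetical, trimmed-down stand-in for the Loader interface.
class Loader {
 public:
  virtual ~Loader() = default;
  virtual Status Load() = 0;
  virtual void Unload() = 0;
};

// Construction only records where the data lives; Load() does the real work.
class VocabularyLoader : public Loader {
 public:
  explicit VocabularyLoader(std::string path) : path_(std::move(path)) {}

  Status Load() override {
    if (path_.empty()) return Status("no path configured");
    // Placeholder for an expensive read from path_.
    words_.reset(new std::vector<std::string>{"hello", "world"});
    return Status();
  }

  void Unload() override { words_.reset(); }

  // Illustrative helper, not part of the documented interface.
  bool loaded() const { return words_ != nullptr; }

 private:
  std::string path_;                                 // Cheap to hold.
  std::unique_ptr<std::vector<std::string>> words_;  // Allocated in Load().
};
```

A freshly constructed `VocabularyLoader` holds only its path, so creating and destroying instances stays cheap, while all allocation happens in Load().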

Constructor & Destructor Documentation

◆ ~Loader()

virtual ~Loader() = default

Member Function Documentation

◆ EstimateResources()

virtual Status EstimateResources(ResourceAllocation *estimate) const = 0 [pure virtual]

Estimates the resources a servable will use.

IMPORTANT: This method's implementation must obey the following requirements, which enable the serving system to reason correctly about which servables can be loaded safely:

- The estimate must represent an upper bound on the actual value.

- Prior to load, the estimate may include resources that are not bound to any specific device instance, e.g. RAM on one of the two GPUs.

- While loaded, for any devices with multiple instances (e.g. two GPUs), the estimate must specify the instance to which each resource is bound.

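- The estimate must be monotonically non-increasing, i.e. it cannot increase over time. Reasons to have it potentially decrease over time include: (a) replacing a conservative estimate with an actual measurement once loaded in memory; (b) the load process consuming extra transient memory that is not used in steady state after the load completes.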

Returns an estimate of the resources the servable will consume once loaded. If the servable has already been loaded, returns an estimate of the actual resource usage.

Implemented in tensorflow::serving::SimpleLoader< ServableType >, and tensorflow::serving::ResourceUnsafeLoader.
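The upper-bound and non-increasing requirements can be illustrated with a small sketch. Everything here is an assumption made for illustration: `ResourceAllocation` is reduced to a single hypothetical `ram_bytes` field, `EstimateResources()` returns `void` instead of `Status`, and the "2x file size" heuristic and the pretend measurement are invented numbers.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Hypothetical stand-in; the real ResourceAllocation is richer than one field.
struct ResourceAllocation {
  std::uint64_t ram_bytes;
};

class EstimatingLoader {
 public:
  // Before loading, report a deliberately conservative upper bound
  // (here: twice the on-disk size, an invented heuristic).
  explicit EstimatingLoader(std::uint64_t file_bytes)
      : file_bytes_(file_bytes), estimate_bytes_(2 * file_bytes) {}

  // Simplified signature: the documented method returns a Status.
  void EstimateResources(ResourceAllocation* estimate) const {
    estimate->ram_bytes = estimate_bytes_;
  }

  void Load() {
    // Pretend measurement of steady-state usage after loading.
    const std::uint64_t measured = file_bytes_ + file_bytes_ / 2;
    // Load() may not exceed the earlier estimate, so measured <= estimate
    // is expected; std::min guards the "never increases" requirement.
    estimate_bytes_ = std::min(estimate_bytes_, measured);
  }

 private:
  std::uint64_t file_bytes_;
  std::uint64_t estimate_bytes_;
};
```

In this sketch the estimate tightens once, when the conservative guess is replaced by the measured value, and never grows afterwards.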

◆ Load()

virtual Status Load() [inline, virtual]

Fetches any data that needs to be loaded before using the servable returned by servable(). May use no more resources than the estimate reported by EstimateResources().

If implementing Load(), you don't have to override LoadWithMetadata().

Reimplemented in tensorflow::serving::test_util::FakeLoader, and tensorflow::serving::SimpleLoader< ServableType >.

◆ LoadWithMetadata()

virtual Status LoadWithMetadata(const Metadata &metadata) [inline, virtual]

Similar to Load(), but takes Metadata as a parameter, which the loader implementation may use as it sees fit.

If you override LoadWithMetadata() because your loader can make use of the metadata, you do not need to override Load().

Reimplemented in tensorflow::serving::SimpleLoader< ServableType >.
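The "override one or the other" guidance for Load() and LoadWithMetadata() can be sketched as below. The sketch assumes that the default LoadWithMetadata() simply forwards to Load() and that the default Load() reports an error; both defaults are assumptions made for illustration. `Status`, `Metadata` (reduced to a name and version), `PlainLoader`, and `MetadataAwareLoader` are hypothetical stand-ins.

```cpp
#include <cassert>
#include <string>
#include <utility>

// Hypothetical stand-in for the serving library's Status type.
class Status {
 public:
  Status() = default;  // Default-constructed means "OK".
  explicit Status(std::string error) : ok_(false), error_(std::move(error)) {}
  bool ok() const { return ok_; }

 private:
  bool ok_ = true;
  std::string error_;
};

// Hypothetical stand-in; the documented Metadata carries a ServableId.
struct Metadata {
  std::string name;
  long long version;
};

class Loader {
 public:
  virtual ~Loader() = default;
  // Assumed default: report that nothing was implemented.
  virtual Status Load() { return Status("Load isn't implemented."); }
  // Assumed default: ignore the metadata and forward to Load().
  virtual Status LoadWithMetadata(const Metadata& /*metadata*/) {
    return Load();
  }
};

// Overrides only Load(); callers using LoadWithMetadata() still reach it.
class PlainLoader : public Loader {
 public:
  Status Load() override {
    ++load_calls;
    return Status();
  }
  int load_calls = 0;
};

// Overrides only LoadWithMetadata() because it wants the name and version.
class MetadataAwareLoader : public Loader {
 public:
  Status LoadWithMetadata(const Metadata& metadata) override {
    loaded_label = metadata.name + ":" + std::to_string(metadata.version);
    return Status();
  }
  std::string loaded_label;
};
```

Under these assumptions a caller can always invoke LoadWithMetadata(), and a loader that only implements Load() still works unchanged.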

◆ servable()

virtual AnyPtr servable() = 0 [pure virtual]

Returns an opaque interface to the underlying servable object. The caller should know the precise type of the interface in order to make actual use of it. For example:

CustomLoader implementation:

    class CustomLoader : public Loader {
     public:
      ...
      Status Load() override {
        servable_ = ...;
        return Status();  // A default-constructed Status signals success.
      }

      AnyPtr servable() override { return servable_; }

     private:
      CustomServable* servable_ = nullptr;
    };

Serving user request:

    ServableHandle<CustomServable> handle = ...
    CustomServable* servable = handle.get();
    servable->...

If servable() is called after a successful Load() and before Unload(), it returns a valid, non-null AnyPtr object. If called before a successful Load() call or after Unload(), it returns a null AnyPtr.

Implemented in tensorflow::serving::test_util::FakeLoader, and tensorflow::serving::SimpleLoader< ServableType >.
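The null and non-null behaviour of servable() across the lifecycle can be sketched with a simplified type-erased pointer. The `AnyPtr` below is a hypothetical stand-in built on `std::type_index`, written only so the example is self-contained; it is not the library's implementation. `CustomServable::Predict` is likewise invented for the example, and the loader is shown without the base class for brevity.

```cpp
#include <cassert>
#include <memory>
#include <typeindex>
#include <typeinfo>

// Hypothetical stand-in: remembers the pointee's type and only hands the
// pointer back when asked for exactly that type.
class AnyPtr {
 public:
  AnyPtr() : type_(typeid(void)), ptr_(nullptr) {}

  template <typename T>
  AnyPtr(T* ptr) : type_(typeid(T)), ptr_(ptr) {}

  template <typename T>
  T* get() const {
    return type_ == std::type_index(typeid(T)) ? static_cast<T*>(ptr_)
                                               : nullptr;
  }

 private:
  std::type_index type_;
  void* ptr_;
};

struct CustomServable {
  int Predict(int x) const { return 2 * x; }  // Invented for the example.
};

class CustomLoader {
 public:
  void Load() { servable_.reset(new CustomServable()); }
  void Unload() { servable_.reset(); }

  // Null before Load() and after Unload(), non-null in between.
  AnyPtr servable() { return AnyPtr(servable_.get()); }

 private:
  std::unique_ptr<CustomServable> servable_;
};
```

Asking the stand-in for the wrong type yields a null pointer rather than an invalid cast, which is why the caller needs to know the precise type of the servable.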

◆ Unload()

virtual void Unload() = 0 [pure virtual]

Frees any resources allocated during Load() (except perhaps for resources shared across servables that are still needed for other active ones). The loader does not need to return to the "new" state (i.e. Load() cannot be called after Unload()).

Implemented in tensorflow::serving::test_util::FakeLoader, and tensorflow::serving::SimpleLoader< ServableType >.
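One way to honour the exception for shared resources is reference counting, sketched below with `std::shared_ptr`. This is an illustrative design choice, not something the interface prescribes; `SharedEmbeddingTable`, `SharingLoader`, and `holds_private_buffer()` are hypothetical names invented for the example.

```cpp
#include <cassert>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical resource shared by several servable versions.
struct SharedEmbeddingTable {
  std::vector<float> values;
};

class SharingLoader {
 public:
  // Copying a shared_ptr is cheap, so construction stays inexpensive.
  explicit SharingLoader(std::shared_ptr<SharedEmbeddingTable> table)
      : shared_table_(std::move(table)) {}

  // Allocates state that belongs to this servable version only.
  void Load() { private_buffer_.reset(new std::vector<float>(1024, 0.0f)); }

  // Frees this loader's own allocation and drops its reference to the
  // shared table; the table itself survives while other loaders hold it.
  void Unload() {
    private_buffer_.reset();
    shared_table_.reset();
  }

  // Illustrative helper, not part of the documented interface.
  bool holds_private_buffer() const { return private_buffer_ != nullptr; }

 private:
  std::shared_ptr<SharedEmbeddingTable> shared_table_;
  std::unique_ptr<std::vector<float>> private_buffer_;
};
```

With this arrangement, unloading one version releases its private memory immediately, and the shared table is destroyed only when the last loader referencing it is unloaded.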

The documentation for this class was generated from the following file:

- tensorflow_serving/core/loader.h